The Unseen Revolution: How Generative AI is Reshaping Investigative Journalism – Ethical Crossroads, Data Integrity, and Future Frontiers

The relentless pursuit of truth is the bedrock of investigative journalism. For centuries, this pursuit has relied on human ingenuity, tireless research, and unwavering dedication. Today, a new force is emerging, one that promises to both amplify these efforts and challenge their very foundations: Generative Artificial Intelligence (AI). From sifting through mountains of data to drafting preliminary reports, AI is rapidly moving from a theoretical concept to a practical tool in the journalist’s arsenal. This technological leap, however, is not without its complexities. It introduces a fascinating dichotomy, offering unprecedented opportunities for uncovering hidden truths while simultaneously raising profound ethical dilemmas and demanding stringent new standards for data accuracy.

As newsrooms grapple with dwindling resources and an ever-accelerating news cycle, generative AI tools, such as large language models (LLMs) and image and video synthesis systems, present a compelling proposition. They can automate mundane tasks, identify patterns in vast datasets that would overwhelm human analysts, and even assist in crafting narrative structures. Yet with this power comes immense responsibility. The potential for AI to introduce bias, fabricate information (so-called hallucinations), or create convincing deepfakes threatens to erode public trust in journalism at a time when it’s most needed. This article explores the impact of generative AI on investigative journalism: the ethical considerations it introduces, the importance of maintaining data accuracy, and the future that awaits this vital field. It also looks at how journalists can harness AI’s power responsibly, so that the quest for truth remains paramount in an increasingly AI-driven world.

Generative AI: A New Frontier for Uncovering Truths in Investigative Journalism

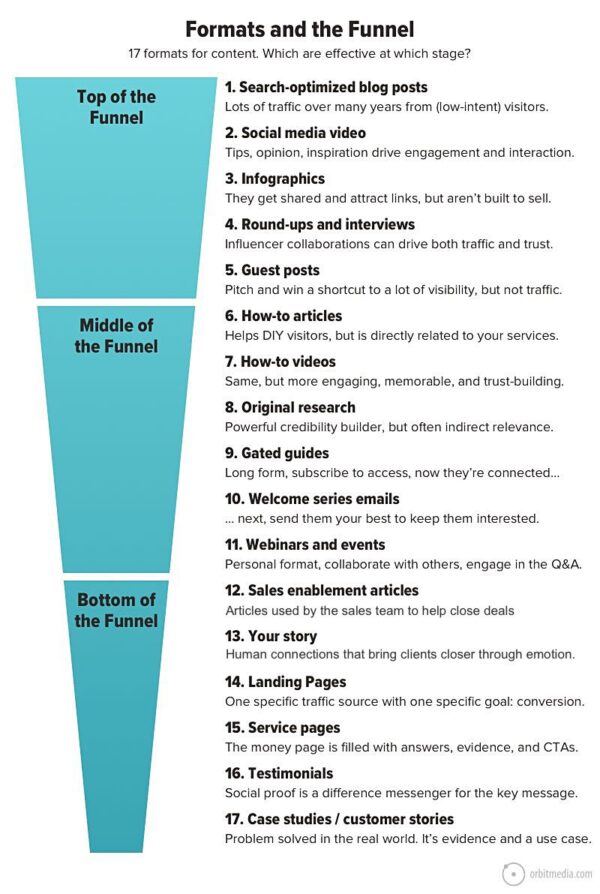

Generative AI, with its capacity to create new content and identify complex patterns, is fundamentally altering the investigative workflow. It empowers journalists to tackle investigations of unprecedented scale and complexity, democratizing access to analytical power previously reserved for specialized data scientists. This shift is not about replacing human intuition but augmenting it, allowing reporters to focus on the nuanced storytelling and critical verification that only humans can provide.

Automating Data Sifting and Analysis

One of the most significant contributions of generative AI to investigative journalism lies in its ability to process and analyze colossal datasets. Traditional investigative reporting often involves sifting through thousands, sometimes millions, of documents – financial records, government reports, emails, legal filings, and social media posts. This process is time-consuming, prone to human error, and often requires specialized skills.

- Rapid Document Review: Generative AI models can quickly summarize lengthy documents, extract key entities (names, organizations, dates, locations), and identify relationships between them. For instance, an AI could analyze hundreds of Freedom of Information Act (FOIA) responses, pinpointing mentions of specific keywords or individuals that warrant further human investigation.

- Pattern and Anomaly Detection: Beyond simple keyword searches, AI can uncover subtle patterns and anomalies that indicate potential wrongdoing. In financial investigations, AI might identify unusual transaction volumes, hidden shell companies, or unexplained transfers of wealth across vast ledgers. In environmental reporting, it could correlate pollution data with industrial permits to flag potential violations.

- Network Mapping: AI can construct intricate networks from unstructured data, mapping relationships between individuals, corporations, and political entities. This is invaluable for exposing corruption networks, identifying beneficial owners of opaque companies, or tracking the spread of disinformation campaigns.

- Synthesizing Information: AI can synthesize information from disparate sources – academic papers, news archives, government databases – to provide a coherent overview of a topic, person, or organization. This helps journalists quickly grasp complex issues and identify knowledge gaps.

- Generating Initial Drafts of Background Information: While not for final publication, AI can generate initial drafts of background sections, profiles, or summaries of complex reports. This frees up human journalists to conduct interviews, verify facts, and pursue original leads.

- Identifying Gaps and Contradictions: By cross-referencing information from multiple sources, AI can flag inconsistencies or missing pieces of information, prompting journalists to dig deeper into specific areas.

- Brainstorming and Ideation: AI can suggest different angles for a story, generate potential headlines, or even outline narrative structures based on the data provided. This helps journalists overcome writer’s block and explore diverse storytelling approaches.

- Drafting Routine Elements: For certain types of investigative reports, such as those relying heavily on data analysis, AI can draft routine sections like methodology explanations, statistical summaries, or factual descriptions of events, leaving journalists to focus on the human impact and narrative flow.

- Perpetuating Stereotypes: An AI trained on historical news archives might inadvertently perpetuate stereotypes about certain demographic groups if those stereotypes were prevalent in the original data. This could lead to biased reporting or overlook crucial perspectives.

- Algorithmic Discrimination: In data analysis for investigations, biased AI could inadvertently flag individuals or groups based on discriminatory patterns rather than actual wrongdoing, leading to unjust scrutiny.

- The “Black Box” Problem: Many advanced AI models operate as “black boxes,” meaning their decision-making processes are opaque and difficult to interpret. This lack of explainability makes it challenging to identify and mitigate bias, posing a significant challenge for accountability in journalism.

- Disclosing AI’s Role: Journalists should clearly attribute AI’s involvement, whether it was used for data analysis, drafting background sections, or generating visualizations. This could be through specific disclaimers, metadata, or clear statements within the article.

- Avoiding Misleading Content: The line between human-generated and AI-generated content can blur, especially with sophisticated LLMs. Journalists must ensure that readers understand the origin of information and are not led to believe AI-assisted content is purely human creation.

- Watermarking and Metadata: Implementing technical solutions like digital watermarks or embedded metadata can help identify AI-generated images, audio, or video, providing a verifiable trail of provenance.

- Fabricated Evidence: Deepfakes can be used to fabricate evidence, creating convincing but entirely false recordings of individuals saying or doing things they never did. This could be used to discredit sources, manipulate public opinion, or sow widespread disinformation.

For example, a team investigating a municipal corruption scandal might feed thousands of city contracts, emails, and financial disclosures into an AI. The AI could then highlight unusual bid patterns, frequent communication between contractors and specific officials, or payments to shell companies that appear to be connected. This doesn’t replace the journalist’s work; it provides a highly targeted starting point for deeper investigation.

Enhancing Research and Backgrounding

Before an investigative story can even begin, extensive background research is required. Generative AI significantly streamlines this phase, allowing journalists to gain a comprehensive understanding of a subject much faster than before.

Imagine a journalist researching a new corporate scandal. An AI could rapidly compile a dossier on the company’s history, key executives, previous legal issues, and financial performance, drawing from public records and news archives. This allows the reporter to arrive at interviews with a much stronger foundational understanding.

Content Generation and Storytelling Support

While the idea of AI writing entire investigative pieces is contentious and largely undesirable for ethical and accuracy reasons, generative AI can serve as a powerful assistant in the content creation process.

Personalized Content Delivery (Contextual): While less direct for core investigative reporting, AI can help tailor the presentation* of findings to different audiences or platforms, suggesting optimal formats for data visualizations or interactive elements that enhance understanding.

It’s crucial to emphasize that AI’s role here is supportive. The ultimate narrative, the critical analysis, and the ethical framing of an investigation must always remain firmly in human hands. Generative AI is a tool to enhance efficiency and explore possibilities, not to dictate the story itself.

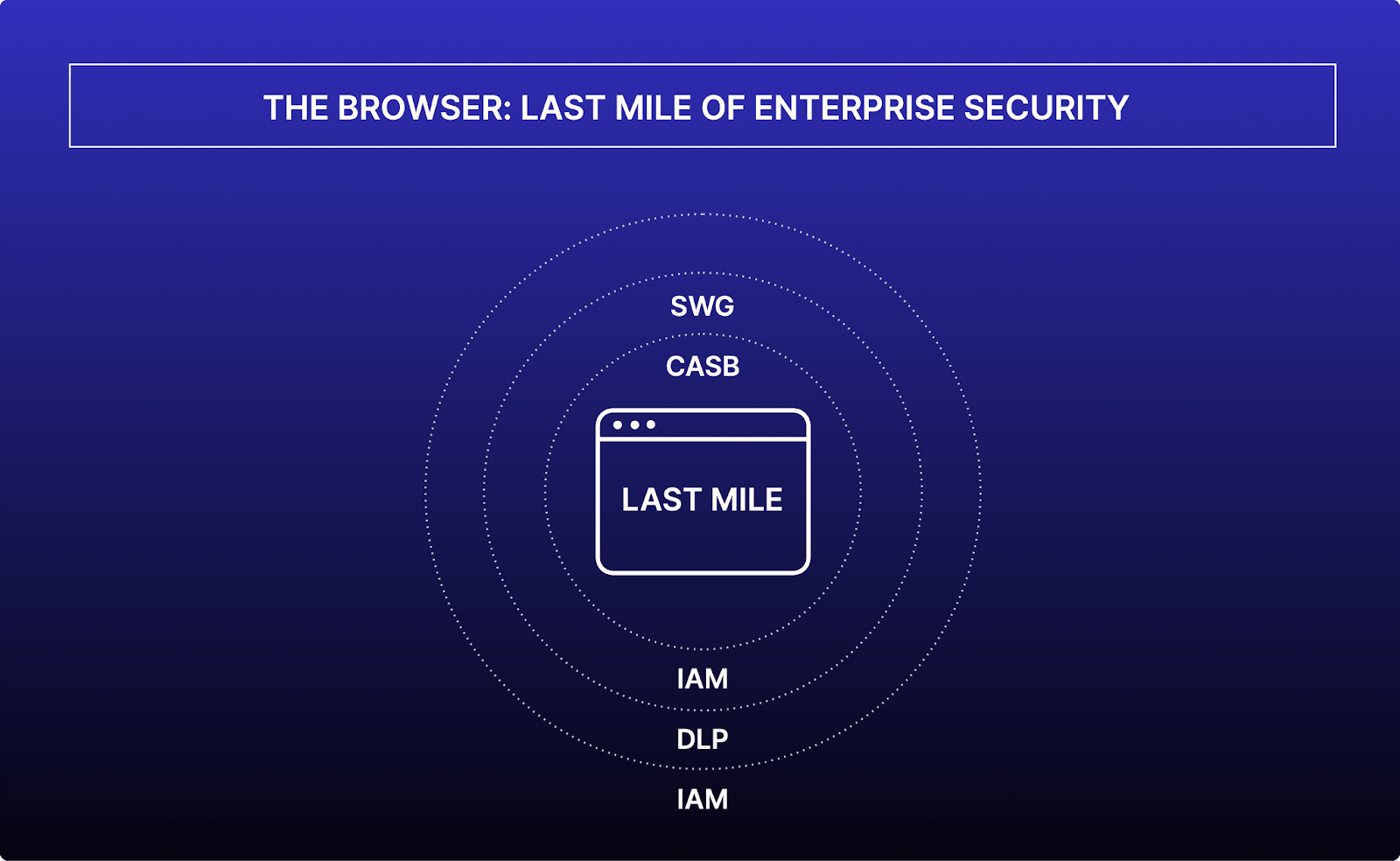

Navigating the Ethical Labyrinth: Critical Considerations for AI in Journalism

The integration of generative AI into investigative journalism, while promising, is fraught with significant ethical challenges. Addressing these concerns proactively is paramount to maintaining public trust and upholding the integrity of the journalistic profession. Without careful consideration and robust safeguards, AI could inadvertently undermine the very principles it’s meant to serve.

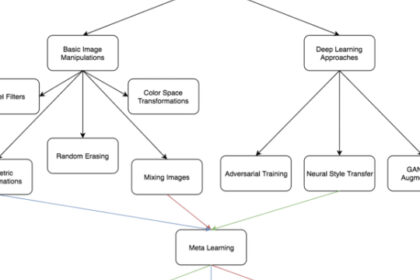

Bias and Fairness in AI Models

One of the most pressing ethical concerns is the inherent bias embedded within AI models. Generative AI learns from vast datasets, which often reflect existing societal biases, inequalities, and historical prejudices. If the training data is skewed, the AI’s outputs will inevitably be skewed.

Actionable Advice: To combat bias, news organizations must prioritize diverse and representative training data, conduct regular bias audits of their AI tools, and ensure human oversight at every stage of AI-assisted investigation. Transparency about the limitations and potential biases of AI tools is also vital.

Transparency and Attribution

The use of generative AI in journalism necessitates clear transparency. Readers have a right to know when and how AI has been used in the creation or analysis of journalistic content. Failing to disclose AI’s involvement can mislead audiences and erode trust.

Key Takeaway: Transparency is not just an ethical obligation; it’s a cornerstone of maintaining credibility in the age of AI-assisted journalism.

The Deepfake Dilemma: Disinformation and Authenticity

Perhaps the most alarming ethical challenge posed by generative AI is its capacity to create highly realistic “deepfakes” – synthetic audio, video, and images that are virtually indistinguishable from genuine content. This poses an existential threat to the concept of verifiable evidence, a core component of investigative journalism.
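
One modest safeguard for verifiable evidence is to log a cryptographic fingerprint of each media file at the moment a newsroom receives it. The sketch below is illustrative only: the function names and the record fields (`source_note`, `received_at_utc`) are assumptions made for this example, not an established newsroom standard. It also has a clear limit: a matching fingerprint shows that a file has not changed since it was logged, but it says nothing about whether the original recording was authentic or synthetic.

```python
import hashlib
from datetime import datetime, timezone
from pathlib import Path


def fingerprint_file(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def make_provenance_record(path, source_note):
    """Build a log entry for a received media file (hypothetical schema)."""
    return {
        "file_name": Path(path).name,
        "sha256": fingerprint_file(path),
        "received_at_utc": datetime.now(timezone.utc).isoformat(),
        "source_note": source_note,
    }


def is_unchanged(path, record):
    """True if the file's current fingerprint matches the logged one."""
    return fingerprint_file(path) == record["sha256"]
```

A reporter might call `make_provenance_record("clip.mp4", "sent by source A via encrypted chat")` on receipt, store the result, and run `is_unchanged` before publication to confirm that the file being analyzed is byte-for-byte the one originally received. The file name and note here are placeholders.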

*Challenges in