Investigative journalism stands as a cornerstone of democratic societies, relentlessly pursuing truth, holding power accountable, and shedding light on hidden injustices. In an era defined by information overload and rapid technological advancement, the tools available to journalists are evolving at an unprecedented pace. Among these, Generative Artificial Intelligence (AI) has emerged as a particularly potent, albeit complex, force. From automating tedious data analysis to drafting preliminary reports, AI promises to revolutionize how journalists uncover and present critical stories. However, this transformative potential is not without its intricate challenges.

- Generative AI’s Role in Modern Investigative Journalism: A Paradigm Shift

- Enhancing Data Collection and Analysis for In-Depth Investigations

- Automating Content Generation and Report Drafting

- Revolutionizing Open-Source Intelligence (OSINT) Gathering

- Navigating the Ethical Minefield: Generative AI and Journalistic Integrity

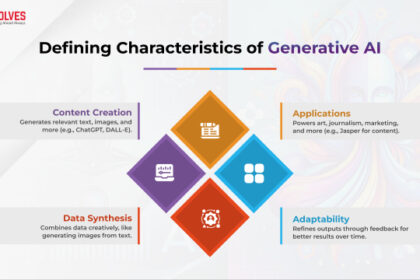

The integration of Generative AI into investigative journalism brings forth a myriad of ethical considerations, demanding careful navigation to uphold journalistic integrity and public trust. Questions surrounding data accuracy, algorithmic bias, and the potential for synthetic media to mislead audiences are paramount. This comprehensive article delves into the profound impact of Generative AI on investigative journalism, meticulously examining the ethical dilemmas it presents, the critical need for data veracity, and the innovative pathways it opens for the future of truth-seeking. As newsrooms worldwide grapple with the implications, understanding this evolving landscape is crucial for both practitioners and the public they serve.

Generative AI’s Role in Modern Investigative Journalism: A Paradigm Shift

The application of Generative AI within investigative journalism marks a significant departure from traditional methodologies, offering capabilities that enhance efficiency, expand scope, and deepen insights. This technological integration is not merely about automation; it represents a paradigm shift in how journalists interact with information, uncover hidden connections, and construct narratives.

Enhancing Data Collection and Analysis for In-Depth Investigations

One of the most immediate and impactful applications of Generative AI in investigative journalism is its ability to process and analyze vast quantities of data. Traditional investigations often involve sifting through mountains of documents, public records, financial statements, social media feeds, and communication logs, a time-consuming and resource-intensive endeavor for human journalists.

Generative AI, particularly through advanced Natural Language Processing (NLP) models, can automate the synthesis of information from disparate, unstructured datasets. It can identify patterns, anomalies, and critical connections that might otherwise remain hidden within the noise. For instance, an AI system could ingest thousands of corporate financial reports and flag unusual transactions or complex ownership structures that may indicate fraud. Similarly, when applied to public procurement databases, AI can highlight discrepancies in bidding processes or identify recurring patterns of contracts awarded to specific entities, which may point towards corruption.
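To make "flagging recurring patterns" more concrete, here is a minimal sketch of the kind of rule-based screen that could sit alongside an AI model when reviewing procurement data. It is not a generative model and not any newsroom's actual method; the `Contract` record, its `bidders` field, the thresholds, and the sample agencies and vendors are all hypothetical. It flags two patterns: one vendor winning a large share of an agency's contracts, and contracts awarded after only a single bid.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Contract:
    agency: str
    vendor: str
    amount: float
    bidders: int  # number of bids received (hypothetical field)


def flag_contracts(contracts, share_threshold=0.5, min_awards=3):
    """Return plain-language flags for a reporter to follow up on.

    A flag is a lead, not a finding: it only says a pattern is unusual.
    """
    flags = []

    # Group awards by the agency that issued them.
    by_agency = defaultdict(list)
    for c in contracts:
        by_agency[c.agency].append(c)

    # Pattern 1: one vendor wins a large share of an agency's contracts.
    for agency, awards in sorted(by_agency.items()):
        wins = Counter(c.vendor for c in awards)
        for vendor, count in sorted(wins.items()):
            share = count / len(awards)
            if count >= min_awards and share >= share_threshold:
                flags.append(
                    f"{agency}: {vendor} won {count}/{len(awards)} awards ({share:.0%})"
                )

    # Pattern 2: contracts awarded after receiving only a single bid.
    for c in contracts:
        if c.bidders == 1:
            flags.append(f"{c.agency}: single-bid award to {c.vendor} ({c.amount:,.0f})")

    return flags


if __name__ == "__main__":
    # Invented sample data for illustration only.
    sample = [
        Contract("Roads Dept", "Acme Paving", 120_000, 3),
        Contract("Roads Dept", "Acme Paving", 95_000, 2),
        Contract("Roads Dept", "Acme Paving", 210_000, 1),
        Contract("Roads Dept", "Bolt Civil", 80_000, 4),
        Contract("Health Dept", "MedSupply Co", 50_000, 3),
    ]
    for flag in flag_contracts(sample):
        print(flag)
    # Roads Dept: Acme Paving won 3/4 awards (75%)
    # Roads Dept: single-bid award to Acme Paving (210,000)
```

Every flag still needs reporting: a dominant vendor may simply be the only qualified local supplier.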

Consider a scenario where an investigative team is probing an alleged network of shell companies involved in money laundering. Manually tracing the ownership, transactions, and legal filings across multiple jurisdictions would take months, if not years. An AI assistant, however, can rapidly cross-reference company registries, bank records, and public statements, visualizing the complex web of connections in a fraction of the time. This frees up human journalists to focus on the qualitative aspects of the story – interviewing sources, verifying facts, and crafting compelling narratives – rather than being bogged down by sheer data volume.
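Tracing ownership through layers of shell companies is, at its core, a walk through a graph. The sketch below assumes the ownership stakes have already been extracted from company registries into a simple dictionary (by an AI tool or by hand); the function name, the data layout, and the companies and people in the example are hypothetical. It multiplies stakes along each chain to estimate how much of the target company each end owner effectively holds, and it skips circular holdings rather than resolving them.

```python
from collections import defaultdict


def ultimate_owners(company, stakes, max_depth=10):
    """Estimate who ultimately owns `company`.

    `stakes` maps an entity to a list of (owner, fraction_owned) pairs, e.g.
    transcribed from company registries. Returns {end_owner: effective_fraction},
    where an end owner is any entity with no recorded owners of its own.
    """
    result = defaultdict(float)

    def walk(entity, fraction, path, depth):
        owners = stakes.get(entity, [])
        if not owners or depth >= max_depth:
            # Nothing recorded above this entity (or the chain is too deep to
            # keep following): attribute the stake to it.
            result[entity] += fraction
            return
        for owner, share in owners:
            if owner in path:
                # Circular holding: skip this edge so the walk terminates.
                # The circular share is dropped, not resolved.
                continue
            # Effective stake = product of the fractions along the chain.
            walk(owner, fraction * share, path | {owner}, depth + 1)

    walk(company, 1.0, frozenset([company]), 0)
    return dict(result)


if __name__ == "__main__":
    # Invented example: one person partly hidden behind a nominee company and a trust.
    stakes = {
        "Harbor Holdings Ltd": [("Alpine Nominees SA", 0.6), ("Jane Doe", 0.4)],
        "Alpine Nominees SA": [("Crescent Trust", 1.0)],
        "Crescent Trust": [("John Roe", 0.5), ("Jane Doe", 0.5)],
    }
    owners = ultimate_owners("Harbor Holdings Ltd", stakes)
    print({name: round(share, 4) for name, share in owners.items()})
    # {'John Roe': 0.3, 'Jane Doe': 0.7}
```

In the example, Jane Doe's direct 40% stake understates her position; following the chain shows she effectively holds 70%.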

The ability of Generative AI to distill actionable intelligence from overwhelming datasets can be decisive in complex investigations. It transforms the investigative process from a largely manual, linear effort into a dynamic, AI-augmented exploration.

Automating Content Generation and Report Drafting

Beyond analysis, Generative AI also possesses the capability to assist in the content creation phase of investigative journalism. While the idea of AI drafting entire investigative reports might seem futuristic or even concerning, its current utility lies in automating more routine or preliminary aspects of content generation.

For example, after a journalist has gathered and verified key facts, an AI model can generate initial drafts of background sections, summaries of findings, or even standardized reports for routine disclosures. This can be particularly useful for localizing national stories, tailoring complex information for specific demographics, or translating intricate legal or scientific jargon into more accessible language for a general audience. An AI could summarize court documents, medical reports, or scientific studies, extracting key points and presenting them in a digestible format. Because generative models can misstate or invent details, each summary must be checked against the original document before the journalist builds on it with their own insights and narrative flair.

This automation extends to generating various content formats, from bullet-point summaries for quick comprehension to detailed descriptive paragraphs. It can help journalists quickly produce different versions of a story for various platforms – a short social media post, a concise news alert, and a longer-form article – all based on the core verified information. This significantly boosts productivity and allows journalists to disseminate information more rapidly and broadly.

Revolutionizing Open-Source Intelligence (OSINT) Gathering

Open-Source Intelligence (OSINT) has become an indispensable tool for investigative journalists, relying on publicly available information to uncover facts. Generative AI elevates OSINT gathering to new heights, offering sophisticated capabilities for sifting through the vastness of the internet and public digital spaces.

AI-powered tools can monitor social media platforms, forums, public databases, and dark web discussions, identifying relevant conversations, trends, and potential leads. For visual investigations, AI can analyze images and videos for authenticity, cross-reference facial recognition data (where ethically permissible and legally compliant), geolocate footage, and detect signs of manipulation or alteration. This is crucial for verifying user-generated content, which is often shared during crises or conflicts.
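One established verification check is whether a supposedly new image has actually been recycled from an earlier event. The sketch below implements average hashing (aHash), a simple perceptual hash, to compare two images. It assumes both images have already been shrunk to the same small grid and converted to grayscale by an imaging library (not shown), and the `max_distance` threshold is an illustrative guess rather than a calibrated value. This catches reused or lightly edited pictures; it does not detect deepfakes.

```python
def average_hash(pixels):
    """Average hash of a small grayscale image.

    `pixels` is a list of rows of brightness values (0-255), assumed to be the
    image already reduced to a small fixed grid such as 8x8. Each bit records
    whether a pixel is brighter than the image's mean brightness.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a, b):
    """Number of bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def likely_same_image(pixels_a, pixels_b, max_distance=5):
    """True if two same-sized grids hash within `max_distance` bits of each other."""
    if len(pixels_a) != len(pixels_b) or len(pixels_a[0]) != len(pixels_b[0]):
        raise ValueError("both images must be reduced to the same grid size first")
    return hamming(average_hash(pixels_a), average_hash(pixels_b)) <= max_distance


if __name__ == "__main__":
    # Invented 4x4 brightness grid standing in for a downscaled video frame.
    original = [[10, 200, 30, 220], [15, 210, 25, 230], [12, 205, 35, 225], [11, 198, 28, 215]]
    # The same frame re-shared with uniformly raised brightness.
    reshared = [[value + 8 for value in row] for row in original]
    print(likely_same_image(original, reshared))  # True
```

Because each bit compares a pixel with the image's own mean, uniform brightness or contrast changes leave the hash untouched, which is why re-encoded or filtered copies still match.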

For instance, an investigative team looking into human rights abuses might use AI to scour satellite imagery for evidence of mass graves or destroyed infrastructure, correlate this with social media posts, and analyze witness testimonies for inconsistencies. AI can also rapidly cross-reference information from disparate sources, such as official statements against leaked documents or public records against private testimonies, to build a more complete picture. This capability is particularly vital in an age of rampant information warfare and disinformation campaigns, when robust verification is more critical than ever.
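Once figures have been pulled out of each source (whether by an AI model or by hand), the cross-referencing step itself can be quite simple. The following minimal sketch compares the numbers two sources report for the same items and lists both the disagreements and the items only one source mentions; the function name, the relative `tolerance` parameter, and the example figures are invented for illustration.

```python
def compare_sources(official, leaked, tolerance=0.0):
    """Compare figures that two sources report for the same items.

    Both arguments map an item label to a number. `tolerance` is relative:
    0.1 ignores differences of up to 10% of the larger value.
    Returns (mismatches, only_in_official, only_in_leaked).
    """
    mismatches = []
    for key in sorted(official.keys() & leaked.keys()):
        a, b = official[key], leaked[key]
        if abs(a - b) > tolerance * max(abs(a), abs(b)):
            mismatches.append((key, a, b))
    only_official = sorted(official.keys() - leaked.keys())
    only_leaked = sorted(leaked.keys() - official.keys())
    return mismatches, only_official, only_leaked


if __name__ == "__main__":
    # Invented figures for illustration only.
    official = {"detainees_reported": 120, "facilities": 3, "budget_usd": 1_000_000}
    leaked = {"detainees_reported": 410, "facilities": 3, "transfers_abroad": 37}
    mismatches, only_official, only_leaked = compare_sources(official, leaked)
    print(mismatches)     # [('detainees_reported', 120, 410)]
    print(only_official)  # ['budget_usd']
    print(only_leaked)    # ['transfers_abroad']
```

A mismatch tells a reporter where to look, not which source is right; items that appear in only one source can be just as newsworthy as contradictions.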

Leveraging Generative AI for OSINT allows journalists to cast a wider net and process more diverse forms of data, leading to more thorough and evidence-based investigations.

Navigating the Ethical Minefield: Generative AI and Journalistic Integrity

The advent of Generative AI, while offering immense potential, also introduces a complex web of ethical challenges that demand meticulous attention from investigative journalists. Upholding journalistic integrity, maintaining public trust, and adhering to core ethical principles become even more critical in an AI-augmented newsroom.

The Peril of Deepfakes and Synthetic Media in Reporting

Perhaps one of the most immediate and alarming ethical concerns is the proliferation of deepfakes and other forms of synthetic media. Generative AI can create highly realistic yet entirely fabricated images, audio, and video that can be extremely difficult to distinguish from genuine content. This poses a profound threat to the very foundation of investigative journalism: the pursuit of verifiable truth.

Imagine a team building an investigation on video evidence, only to discover that key footage has been subtly altered or entirely manufactured by AI. The ability to convincingly fake voices, facial expressions, and entire scenarios can be weaponized to discredit sources, spread misinformation, or even frame individuals. For journalists, the challenge is twofold: avoiding the accidental use of deepfakes in their own reporting and effectively debunking them when malicious actors deploy them.

The erosion of public trust is a significant risk. If audiences begin to doubt the authenticity of all visual and audio evidence, the credibility of journalism as a whole could suffer irreparable damage. Newsrooms must invest in advanced deepfake detection tools, implement rigorous verification protocols, and educate their staff on identifying synthetic media. Furthermore, transparently labeling AI-generated or AI-assisted content (even if factual) may become a necessary ethical standard to maintain audience trust. To learn more about the evolving landscape of synthetic media, consider consulting resources from organizations such as First Draft News or the Poynter Institute.
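The labeling practice suggested above could start with something as simple as a structured disclosure record kept for each story. The sketch below is hypothetical throughout: the `AIDisclosure` class, its fields, the label wording, and the rule that unverified AI-assisted material gets no label are assumptions, not an established newsroom standard. (Provenance standards such as C2PA Content Credentials work at the level of individual media files and are not implemented here.)

```python
from dataclasses import dataclass, field


@dataclass
class AIDisclosure:
    """Hypothetical per-story record of how generative AI was used."""

    story_id: str
    ai_tasks: list[str] = field(default_factory=list)  # e.g. "summarizing court filings"
    synthetic_media: bool = False  # True if any published image, audio or video is AI-generated
    human_verified: bool = False   # True once a journalist has checked the AI-assisted material

    def label(self) -> str:
        """Build the reader-facing disclosure line for this story."""
        if not self.ai_tasks and not self.synthetic_media:
            return "No generative AI was used in producing this story."
        if not self.human_verified:
            # Assumed policy: AI-assisted material is never labeled (or published) unverified.
            raise ValueError(f"{self.story_id}: AI-assisted material has not been verified")
        parts = []
        if self.ai_tasks:
            parts.append("Generative AI assisted with: " + "; ".join(self.ai_tasks) + ".")
        if self.synthetic_media:
            parts.append("AI-generated media in this story is labeled where it appears.")
        parts.append("A journalist reviewed and verified all AI-assisted material.")
        return " ".join(parts)


if __name__ == "__main__":
    record = AIDisclosure(
        "story-017",
        ai_tasks=["summarizing court filings", "translating a contract"],
        human_verified=True,
    )
    print(record.label())
```

Raising an error instead of printing a softer label is a deliberate choice in this sketch: it makes skipping verification a visible failure rather than a quiet wording change.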

Bias, Transparency, and Accountability in AI Algorithms

Generative AI models are trained on vast datasets, and if these datasets contain historical biases, the models are likely to learn and perpetuate them. This can lead to skewed reporting, the neglect of certain demographics, or the reinforcement of harmful stereotypes. For investigative journalism, which often seeks to expose systemic injustices, biased AI outputs can severely undermine the mission.
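One practical way to check for this kind of skew is to audit what an AI tool actually outputs. The sketch below computes, for each group, the share of items the tool flagged, and the largest gap between groups, a measure commonly called the demographic parity difference. The record format and group names are hypothetical, and a gap is a prompt for scrutiny rather than proof of bias, since groups can legitimately differ in their underlying rates.

```python
from collections import defaultdict


def flag_rates(records):
    """Share of items flagged per group.

    `records` is an iterable of (group, was_flagged) pairs, e.g. drawn from a
    log of which entities an AI tool marked as suspicious.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        flagged[group] += int(bool(was_flagged))
    return {group: flagged[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Largest difference in flag rate between any two groups (0.0 means equal rates)."""
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Invented audit log for illustration only.
    log = [
        ("district_a", True), ("district_a", False), ("district_a", False), ("district_a", False),
        ("district_b", True), ("district_b", True), ("district_b", False), ("district_b", False),
    ]
    rates = flag_rates(log)
    print(rates)              # {'district_a': 0.25, 'district_b': 0.5}
    print(parity_gap(rates))  # 0.25
```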

The “black box” problem – where the decision-making process of complex AI algorithms is opaque and difficult to interpret – adds another layer of ethical complexity. If an AI system flags certain