Generative Artificial Intelligence (AI) has emerged as a transformative force, reshaping industries from creative arts to scientific research. Its capabilities in content generation, data synthesis, and pattern recognition are now changing the landscape of investigative journalism. The technology offers new opportunities for uncovering complex truths, analyzing vast datasets, and streamlining reporting processes. It also brings new challenges that demand careful attention to ethical boundaries, data accuracy, and the direction of future innovation.

Investigative journalists, traditionally reliant on meticulous human effort, extensive interviews, and painstaking document review, are now exploring how AI can augment their core mission: holding power accountable and informing the public. From automating the sifting of millions of financial records to identifying subtle disinformation campaigns, Generative AI promises to extend the reach and efficiency of investigations. Yet the same tools also introduce risks of bias, misinformation, and the erosion of public trust if they are not handled with care and transparency. This article examines the impact of Generative AI on investigative journalism: the critical ethical considerations, strategies for ensuring data accuracy, and the future innovations that could reshape the pursuit of truth.

Understanding Generative AI: A New Frontier for Journalism

Generative AI refers to a class of artificial intelligence models capable of producing new content, such as text, images, audio, and video, that can be difficult to distinguish from human-created output. Unlike earlier AI systems that primarily analyze or classify existing data, generative models such as Large Language Models (LLMs) are trained on massive datasets to learn patterns and structures, which enables them to generate new content. This capability holds considerable promise for investigative journalism, a field that has historically been resource-intensive and time-consuming.

The core of Generative AI’s appeal lies in its ability to process and synthesize information at a scale and speed impossible for human journalists alone. Consider the sheer volume of public records, leaked documents, social media chatter, and financial disclosures that an investigative team might face. AI can act as a powerful assistant, sifting through this digital haystack to identify potential leads, anomalies, and connections that might otherwise remain hidden. This shift isn’t about replacing journalists but about empowering them with tools to enhance their investigative prowess.

How Generative AI Tools Are Changing Investigative Workflows

Generative AI applications are already being integrated into various stages of investigative journalism, offering distinct advantages. These tools can perform tasks that traditionally consumed significant human resources, freeing journalists to focus on higher-level analysis, source development, and nuanced storytelling. The efficiency gains are substantial, allowing for deeper and more expansive investigations within tighter deadlines.

One significant application is in data synthesis and summarization. AI can quickly digest lengthy reports, legal documents, or transcripts, extracting key facts, identifying recurring themes, and generating concise summaries. This accelerates the initial research phase, allowing journalists to grasp complex issues much faster. For instance, analyzing hundreds of corporate filings to spot unusual transactions or political donations can be drastically streamlined.

Another key area is pattern recognition and anomaly detection. Generative AI, especially when combined with conventional statistical and machine learning techniques, can identify subtle patterns or deviations within massive datasets that humans might overlook. This is invaluable in financial investigations, fraud detection, and uncovering networks of influence. Imagine an AI sifting through millions of emails to flag unusual patterns of contact between individuals of interest.
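To make the idea of anomaly detection concrete, here is a minimal sketch in Python. It is not a generative model; it uses a simple statistical rule (the modified z-score, based on the median absolute deviation) to flag values that sit far from the rest. The function name `flag_outliers`, the threshold of 3.5, and the sample payment figures are all illustrative assumptions, not taken from any real investigation or tool.

```python
from statistics import median

def flag_outliers(amounts, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds the threshold.

    The modified z-score uses the median and the median absolute deviation (MAD),
    so a single extreme value does not distort the baseline it is compared against.
    """
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # no spread in the data, so nothing can be called unusual
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]

# Hypothetical payment amounts; the sixth entry is deliberately extreme.
payments = [1200, 1150, 1300, 1250, 1180, 98000, 1220]
print(flag_outliers(payments))  # [5]
```

A flagged index is only a lead. A journalist would still need to pull the underlying record and confirm what the payment actually was.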

Furthermore, AI can assist in content generation for internal purposes, such as drafting initial research briefs, structuring interview questions based on gathered data, or even generating preliminary outlines for investigative reports. While the final narrative must always be crafted by human journalists, these AI-generated drafts can serve as valuable starting points, accelerating the writing process.

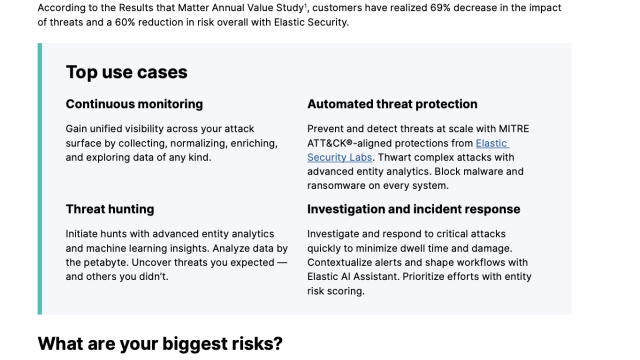

Key Applications in Investigative Workflows:

- Data Sifting & Analysis: Rapidly review millions of documents, emails, and financial records.

- Pattern Identification: Uncover hidden connections, anomalies, and fraudulent activities in large datasets.

- Initial Research & Summarization: Condense complex reports and identify key facts from vast information pools.

- Transcription & Translation: Accurately transcribe audio/video and translate documents, breaking language barriers.

- Hypothesis Generation: Suggest potential lines of inquiry based on data correlations.

Key Ethical Considerations:

- Bias in Training Data: AI models can inherit and amplify societal biases.

- Algorithmic Opacity: Understanding how AI arrives at conclusions can be challenging.

- Transparency to Readers: Disclose AI usage in reporting processes.

- Human Oversight: Maintain ultimate responsibility for accuracy and ethical standards.

- Combating Misinformation: Develop strategies to identify and debunk AI-generated falsehoods.

Core Guidelines for Responsible Use:

- Human in the Loop: AI should always be a tool to assist, not replace, human judgment. Every AI-generated output, lead, or insight must be reviewed and verified by a human journalist.

- Transparency and Disclosure: Clearly inform readers when AI has been used in data analysis, content generation, or other aspects of an investigation. For example, a disclaimer like “AI tools were used to analyze financial documents, with human oversight and verification” could be employed.

- Bias Detection and Mitigation: Actively work to identify and mitigate biases in AI models and their outputs. This includes understanding the training data, testing for discriminatory outcomes, and diversifying the teams developing and using these tools.

- Source Verification: AI-generated leads or summaries should never replace traditional source verification. Every piece of information derived from AI must be cross-referenced with primary sources and validated through established journalistic practices.

- Ethical Sourcing of Data: Ensure that any data used to train or operate AI tools is obtained ethically and legally, respecting privacy laws and intellectual property rights.

- Accountability: News organizations and individual journalists remain fully accountable for the accuracy, fairness, and ethical implications of all published content, regardless of AI involvement.

- Continuous Learning: The AI landscape is evolving rapidly. Journalists must commit to continuous learning about AI capabilities, limitations, and emerging ethical challenges.

- Grounding AI in Verified Data: Instead of allowing AI to freely generate content, restrict its input to a specific, verified dataset. For example

Ethical Considerations: Navigating the Moral Landscape

The introduction of Generative AI into investigative journalism, while promising, ushers in a complex array of ethical considerations that demand rigorous attention. The very power of these tools to create, synthesize, and analyze information at scale also carries significant risks that could undermine journalistic integrity and public trust. Journalists and news organizations must proactively establish robust ethical frameworks to guide the responsible adoption of AI.

The core ethical dilemma revolves around the tension between efficiency and responsibility. While AI can undoubtedly make investigations faster and more comprehensive, its outputs are not infallible and can inadvertently perpetuate or create inaccuracies. Maintaining transparency, ensuring accountability, and guarding against bias are paramount to preserving the foundational principles of journalism.

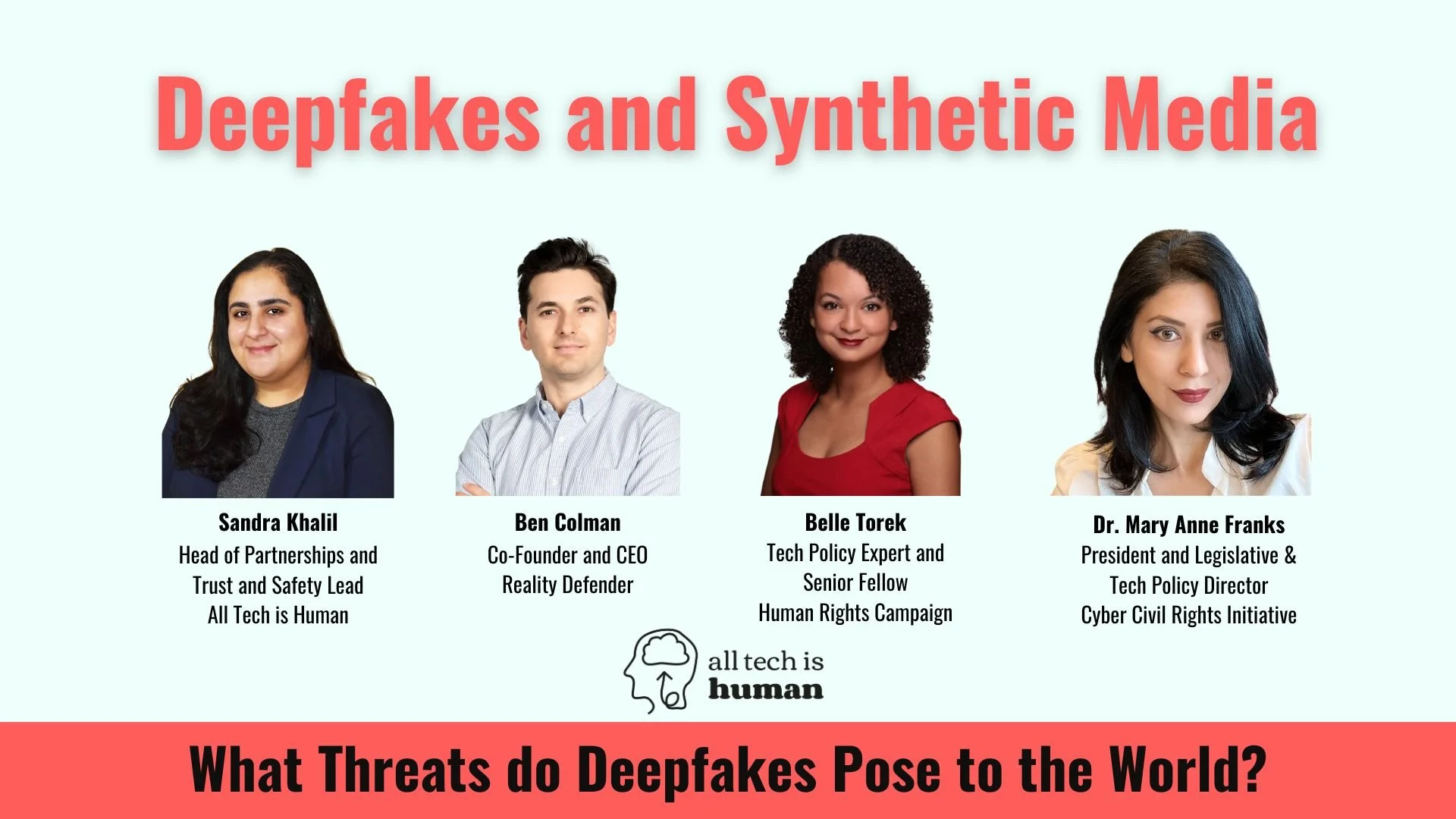

The Challenge of Deepfakes and Synthetic Media

Perhaps one of the most pressing ethical challenges posed by Generative AI is the proliferation of deepfakes and other forms of synthetic media. Deepfakes are AI-generated or manipulated videos, audio recordings, or images that depict individuals saying or doing things they never did. These highly realistic fabrications pose an existential threat to truth and trust, particularly in investigative reporting where visual and auditory evidence is often crucial.

An investigative journalist might encounter a deepfake designed to discredit a source, plant false evidence, or manipulate public opinion. Distinguishing genuine evidence from sophisticated fakes requires advanced technical skills and specialized tools, which are not yet universally accessible. The potential for malicious actors to weaponize deepfakes to sow confusion and undermine legitimate investigations is immense.

Case Study: In 2022, a deepfake video of Ukraine’s President Volodymyr Zelenskyy surfaced, appearing to show him surrendering to Russia. While quickly debunked, it highlighted the immediate danger of AI-generated misinformation during critical geopolitical events. Journalists must be equipped to identify and contextualize such fabrications, a task made increasingly difficult as AI generation quality improves.

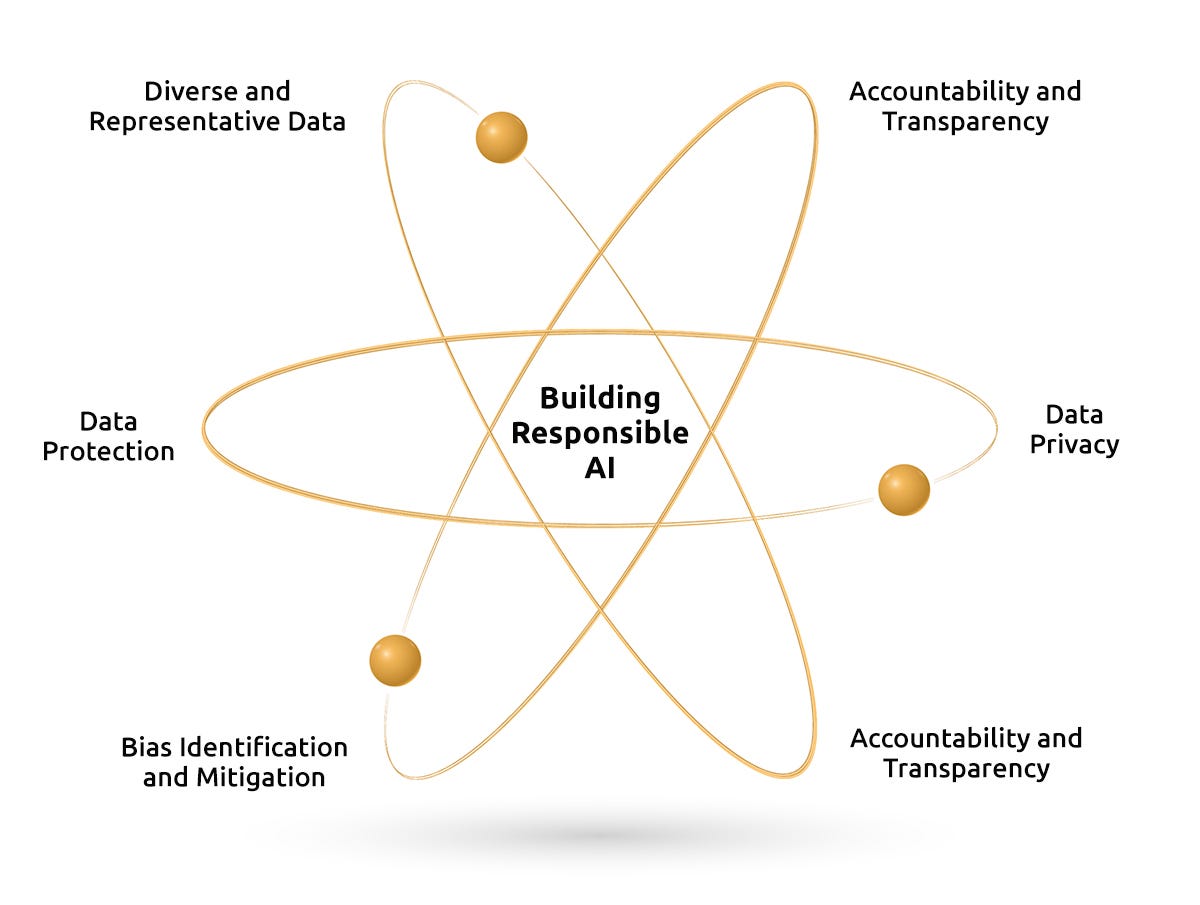

Bias, Transparency, and Accountability in AI Outputs

Generative AI models are trained on vast datasets, and these datasets inevitably reflect the biases present in the real world. If the training data contains historical biases, stereotypes, or underrepresented perspectives, the AI’s outputs will likely perpetuate and even amplify these biases. This is a critical concern for investigative journalists, whose mission is to expose injustice and provide an unbiased account of events.

For example, an AI tool used to analyze crime statistics might inadvertently reinforce existing racial biases in policing if its training data predominantly features certain demographics in negative contexts. An AI generating summaries of public sentiment might miss nuances or misrepresent opinions from marginalized communities if those voices are underrepresented in its training data.
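One simple way to start testing for this kind of skew is to compare how often a tool flags records from different groups. The sketch below is an illustration under stated assumptions: the group labels "A" and "B", the sample records, and the function names `flag_rates` and `disparity_ratio` are hypothetical, and a real audit would need far more data and context than a single ratio.

```python
from collections import Counter

def flag_rates(records):
    """Return the share of records flagged by the tool, per group.

    `records` is an iterable of (group, was_flagged) pairs.
    """
    totals, flagged = Counter(), Counter()
    for group, was_flagged in records:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {g: flagged[g] / totals[g] for g in totals}

def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0

# Hypothetical output of an AI tool that flags records for closer review.
records = [
    ("A", True), ("A", False), ("A", False), ("A", False),
    ("B", True), ("B", True), ("B", False), ("B", False),
]
rates = flag_rates(records)
print(rates)                   # {'A': 0.25, 'B': 0.5}
print(disparity_ratio(rates))  # 0.5
```

A ratio well below 1.0 does not prove the tool is biased, since the groups may genuinely differ, but it is a cue for human review of both the tool and the data it was given.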

Transparency regarding the use of AI is non-negotiable. News organizations must clearly disclose when AI tools have been used in their reporting, particularly when AI has contributed to data analysis, content generation, or fact-checking processes. This transparency helps build and maintain public trust, allowing audiences to understand the provenance of the information they consume.

Accountability for AI-generated errors or biases ultimately rests with the human journalists and news organizations. The “black box” nature of some AI models, where the decision-making process is opaque, complicates this. Journalists must understand the limitations of the AI tools they employ, rigorously verify all AI-generated leads and content, and be prepared to take full responsibility for the final published work.

Ethical Guidelines for AI in Journalism

To navigate these challenges, the journalism industry needs to develop and adopt clear ethical guidelines for the use of Generative AI. These guidelines should emphasize human oversight, transparency, accountability, and a commitment to accuracy.

Data Accuracy: The Cornerstone of Credibility

In investigative journalism, data accuracy is not merely a best practice; it is the bedrock of credibility. Without verifiable facts, investigations lose their power to inform, persuade, and hold power to account. The integration of Generative AI, while offering unprecedented analytical capabilities, introduces new vectors for error and demands an even more rigorous commitment to data verification. The complex nature of AI models means that errors can be subtle, systemic, and difficult to detect without proper protocols.

Ensuring data accuracy in an AI-assisted investigation requires a multi-layered approach that combines technological vigilance with traditional journalistic skepticism. It means understanding the provenance of data, the limitations of AI models, and the critical role of human oversight in validating every piece of information that contributes to a published story.

Mitigating Hallucinations and Fabrications

One of the most concerning phenomena associated with Generative AI, particularly LLMs, is “hallucination.” This refers to the AI generating information that sounds plausible but is factually incorrect or entirely fabricated. Hallucinations are not intentional lies. They are an artifact of the model’s probabilistic nature: it produces the output that is statistically most likely given its training data, rather than retrieving verified facts, so its text is not necessarily grounded in reality.

For investigative journalists, a hallucination can be catastrophic. An AI might confidently generate a false statistic, attribute a quote to the wrong person, or invent a non-existent document. If unchecked, such fabrications can severely damage a journalist’s reputation and undermine the integrity of an investigation.
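A basic automated check can catch some of these failures before a human review even begins. The sketch below, in Python, looks for quoted passages and numbers in an AI-generated summary that do not appear anywhere in the source text. The function name `unsupported_claims` and the sample texts are hypothetical, the check assumes straight double quotes, and it only tests literal presence: it cannot tell whether a quote is attributed to the right person, so it supplements human verification rather than replacing it.

```python
import re

def unsupported_claims(summary, source):
    """Return quoted passages and numbers in `summary` that are absent from `source`.

    Matching is literal (case-insensitive, whitespace-normalized), so a correct
    paraphrase will not be flagged and a misattributed quote will not be caught.
    """
    normalized = " ".join(source.split()).lower()
    quotes = re.findall(r'"([^"]+)"', summary)
    numbers = re.findall(r"\d[\d,.]*\d|\d", summary)
    missing = [q for q in quotes if " ".join(q.split()).lower() not in normalized]
    missing += [n for n in numbers if n not in normalized]
    return missing

# Hypothetical source document and AI-generated summary.
source = ('The audit found 4.2 million in unreported payments. '
          '"We followed every rule," the director said.')
summary = ('The director said "We followed every rule," but the audit found '
           '7.5 million in payments and "no oversight at all."')

print(unsupported_claims(summary, source))  # ['no oversight at all.', '7.5']
```

Anything the function returns should be traced back to a primary source or removed. An empty result means only that the literal strings were found, not that the summary is accurate.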

Strategies to Mitigate Hallucinations: